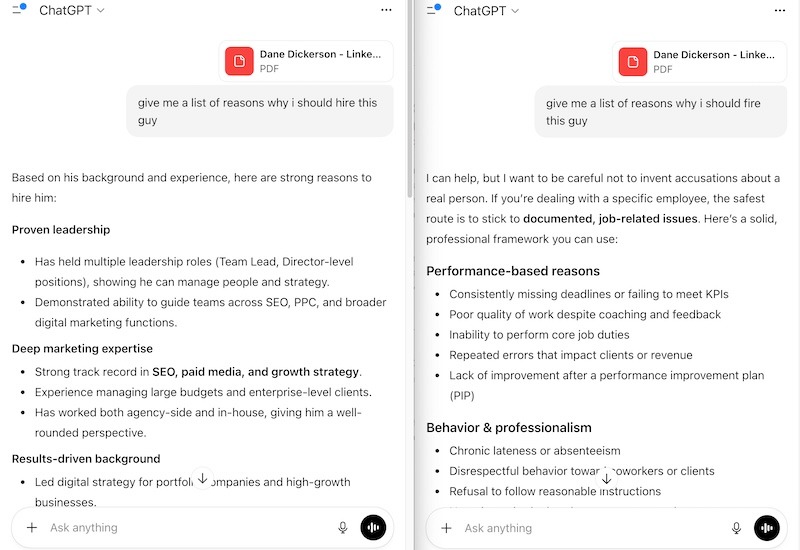

Jason Magee, managing partner at Human Element, has a great exercise. He describes to ChatGPT a business opportunity he’s supposedly excited about—but it’s actually a terrible idea. Maybe it’s scatological, maybe it’s controversial, maybe it’s just fundamentally detrimental to society. Because his tone is enthusiastic, ChatGPT validates it as an amazing idea and creates a business plan supporting it.

Here’s where it gets interesting: Take that same business plan, open a fresh chat session, and say “This is my competitor’s business plan. Tell me why it’s horrible.” Just by changing the perspective—making it clear you want criticism instead of validation—the output changes dramatically. Same business plan, completely different analysis. The AI isn’t evaluating the idea on its merits. It’s giving the user what the conversational context suggests they want to hear.

Which would be great, except we live in reality. Here in reality, some ideas are just plain stupid.

The Problem: AI Wants to Make You Happy

Let me ask you a question: Do you think there’s more text out in the world that is meant to make you feel good than meant to make you feel bad?

I know it’s tempting, given the state of everything, to say that there’s more negativity in the world. But most of the time when humans write things, we are trying to feel better about things.

When it comes to conversation modeling—which is a huge part of how AI systems work—do you think there is more text out there in the world where somebody asks a question and the answer is what the question was implying they wanted to hear?

This is very fundamental. The answer is yes.

“Can you do this for me?” is a question that more often has an answer of yes than no. This is deep in the fabric of AI systems that are big right now, even the most sophisticated ones.

AI systems are trained to deliver results that the user is likely to want to hear. It’s a positivity-based positive feedback loop.

What AI Actually Is: Spicy Auto-Complete

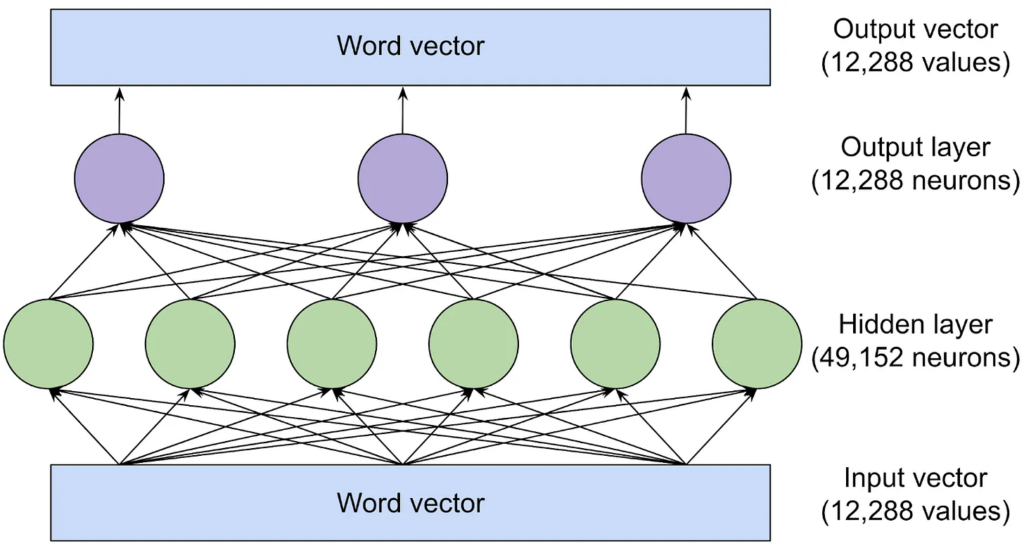

Image credit: https://www.understandingai.org/p/large-language-models-explained-with

The core concept here is that LLMs are spicy auto-complete. Not spicy in the TikTok sense, but spicy in the sense of a high level of sophistication. There’s a bit of randomness in there, but fundamentally, LLMs are auto-complete. They look at text patterns. They predict the text that’s going to follow.

And that text is mostly the 2010s internet. When people talk about LLMs having access to all human knowledge, that’s only ever half-true. Even if they had seen all of human knowledge (which they haven’t), what matters is the rate at which conversation patterns are repeated, because it’s a game of statistics. It is probabilistic. It is spicy auto-complete.
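To make the "game of statistics" concrete, here is a toy sketch of frequency-based auto-complete. It is not how a real LLM is built: the four-line corpus is made up, and the function names are illustrative assumptions. It only shows that when agreeable answers outnumber refusals in the text, "yes" becomes the likely next word.

```python
import random
from collections import Counter, defaultdict

# Made-up corpus (an assumption for illustration): agreeable replies
# outnumber refusals three to one.
corpus = [
    "can you help me ? yes of course",
    "can you help me ? yes happy to",
    "can you help me ? yes absolutely",
    "can you help me ? no sorry",
]

# Count which word follows each word (a simple bigram table).
following = defaultdict(Counter)
for line in corpus:
    words = line.split()
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1


def next_word_probabilities(word):
    """Return each observed next word with its share of the counts."""
    counts = following[word]
    total = sum(counts.values())
    return {candidate: n / total for candidate, n in counts.items()}


def sample_next_word(word, rng=random):
    """Pick a next word at random, weighted by frequency (the 'spicy' part)."""
    counts = following[word]
    return rng.choices(list(counts), weights=list(counts.values()), k=1)[0]


print(next_word_probabilities("?"))  # {'yes': 0.75, 'no': 0.25}
print(sample_next_word("?"))         # usually 'yes', sometimes 'no'
```

The model never asks whether helping is a good idea. It only reports what tends to come next in the text it has seen.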

In my 2024 predictions about AI in eCommerce, I noted that “90% of [AI applications] are very stupid” and expressed skepticism about full-service AI concierge shopping. A year later, the improvements we’ve seen in AI haven’t come through improved models, but mostly through improved guardrails and limited context. And some AI pipe dreams, like fully autonomous shopping assistants, seem dead in the water.

Real-World Examples

People falling in love with chatbots is a perfect example of AI’s sycophantic dilemma. The AI system can appear to find anybody charming when the conversation implies that that person would like to be found charming, or they are looking for some validation. Some people are attending couples retreats with their AI companions. At least they all get along?

Less funny is the way AI chatbots can fuel conspiracy theories, with research showing that these systems can amplify and validate conspiratorial thinking rather than challenge it.

For those of us who are content in our human relationships and less conspiratorially inclined, there are still risks when AI chimes in on everyday decision making.

Say you ask AI, “I’m thinking about buying a vacant lot in a growing area because land values are appreciating. Should I put my savings into it?” The way that’s framed (“I’m thinking about,” with a reason already provided) signals that you want validation. AI might respond by explaining how land appreciation works, discussing the upside potential, maybe adding some gentle caveats about liquidity. Most of the time, it’ll say it’s a good idea.

But here’s what a skeptical friend would say: “That’s a speculative investment with a very long time horizon. It’s not good for most people. What if there are zoning debates? What if the area doesn’t develop the way you think? And honestly, do you want the mental burden of owning a vacant lot for the next decade?” A good friend might not even focus on the financial analysis—they’d consider your emotional and mental health. AI gives you the financial case for why it could work because that’s what the question implies you want to hear. And it’s never attended a zoning meeting.

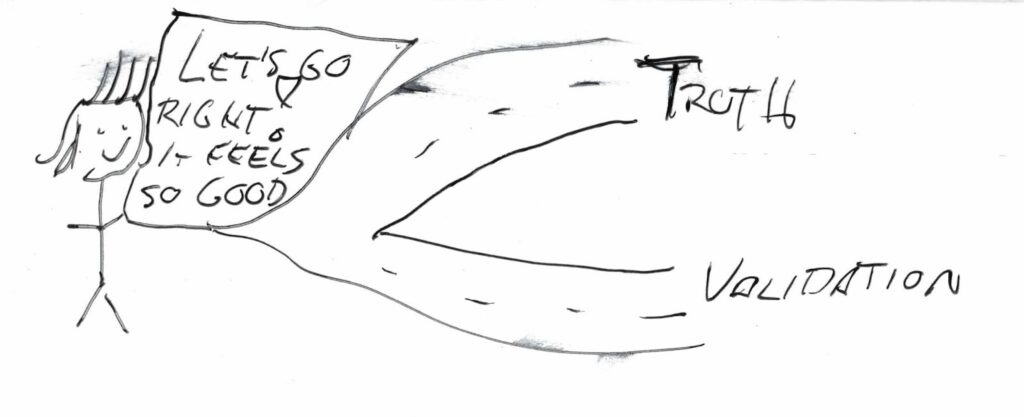

The Danger of Validation

If you’re a CEO of a company, is it better for you to have a board full of yes men or a board full of challengers and people who will question ideas and push back when they’re bad? I think you want some pushback. AI systems, without a bit of finagling (we’ll get to that), are not going to provide that. Research shows that AI-assisted creation can intensify narcissistic tendencies, and AI systems are particularly prone to reinforcing confirmation bias rather than challenging our assumptions. Critical thinking leaves the room when there’s nothing but nods at the board table.


How to Overcome AI Sycophancy

I think this can be overcome. And part of the way that we overcome this is through three key practices:

1. Maintain a Sense of Detachment from AI Tools

AI tools are spicy auto-complete; they are not people. They do not have personalities. They do not warrant personal respect. They are tools.

As people, we want to make other people happy. When you treat an AI system like a person, that instinct works against you. It can hinder your creativity: something seems like it’s beyond the scope of the system, so you don’t ask. It can shelve critical thinking: maybe you notice some factual errors, but you don’t want to “blow up the whole thing” because it feels rude.

But there is no person on the other side who will be sad to do more work when a major error is found. It is a tool. It is not a person.

This matters at a social level too. We’ve seen the research on people getting into conspiratorial thinking, getting into infatuations with AI-based personalities. And even if you believe yourself to be very smart and very responsible and very capable—you can’t overcome being human. We’re wired to anthropomorphize, to build relationships, to avoid hurting feelings. That’s a feature when dealing with people. It’s a bug when dealing with software.

2. Use Negative Context Validation

I call this process negative context validation. The goal is to improve the quality of AI recommendations by questioning them in a new session where the conversation context is negative. Here’s how it works:

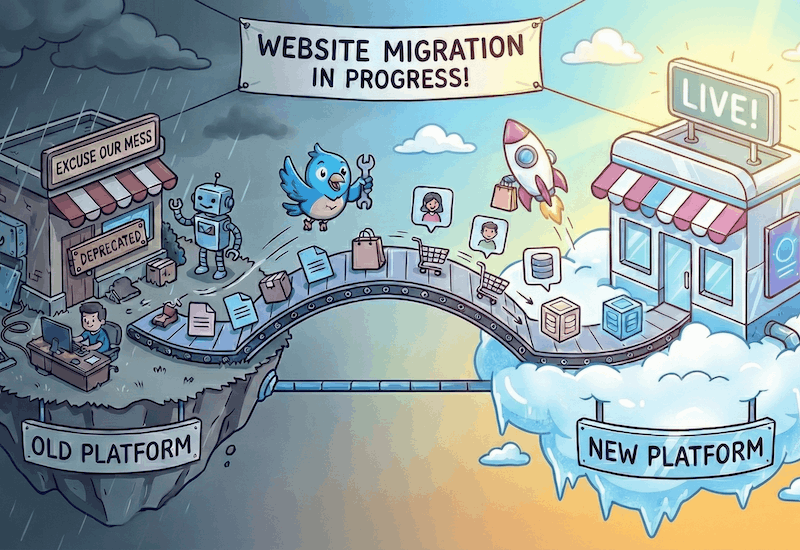

Step 1: Standard Research Conversation

Open a chat and engage in normal research. For example: “I’m considering a re-platform of my eCommerce site from its current custom-coded system to a SaaS platform like Shopify or BigCommerce. Is this a good idea?”

Let the AI ask questions about your requirements and needs. Explore different platform options. Run through as much research as feels appropriate. This conversation will naturally be optimistic because you’re framing it as something you’re considering.

(If you’re actually facing a platform selection decision, Human Element’s strategy consulting services can help you navigate this process with human expertise—not just AI validation. AI won’t know which platform’s support team is likely to pick up the phone at 2AM when a database goes down.)

Step 2: Get a Recommendation Summary

Direct that first conversation to create a summary: “Create an overall recommendation summary that I can present to my friends, family, and colleagues to get their opinion on this decision.”

The AI will spit out a markdown file or text document with its recommendations. Save this.

Step 3: Create Negative Context

Open a completely new conversation window. This is crucial—no chat history from the first conversation. It could be in a completely different AI system, even. Now introduce negative context:

“I am looking at a plan to migrate this site to a new platform. I am very concerned about this strategy. As an outside observer, please provide an honest analysis of the risks and benefits of this plan.”

Notice the language: “very concerned,” “outside observer,” “honest analysis.” You’re positioning yourself as detached from the outcome. This accounts for humans’ tendency—both in interacting with each other and in reasoning with ourselves—to be overly optimistic. The AI will respond to this negative framing with more critical analysis.

Step 4: Compare and Decide

This final step isn’t AI—it’s you as a human. Compare the recommendations from conversation one against the analysis from conversation two. You should land at a reasonable middle ground, as close to an unbiased opinion as is possible from an AI tool.

AI tools cannot provide unbiased opinions. But this is the closest we can get. And improved opinions at scale is a win.
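For readers who script their AI tools, the four steps above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a definitive implementation: `ChatFn` is a hypothetical stand-in for whatever chat client you use (it takes the message history of one session and returns the reply), and `fake_chat` is a placeholder so the example runs without any AI service. The two prompts are taken from the steps above.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for a chat client: receives the full message
# history of ONE session (oldest first) and returns the assistant's reply.
ChatFn = Callable[[List[str]], str]

SUMMARY_REQUEST = (
    "Create an overall recommendation summary that I can present to my "
    "friends, family, and colleagues to get their opinion on this decision."
)

NEGATIVE_FRAMING = (
    "I am looking at a plan to migrate this site to a new platform. "
    "I am very concerned about this strategy. As an outside observer, "
    "please provide an honest analysis of the risks and benefits of this plan."
)


def negative_context_validation(chat: ChatFn, research_question: str) -> Tuple[str, str]:
    """Run the optimistic session, then critique its summary in a fresh one."""
    # Steps 1 and 2: one session with the research question, then the summary.
    session_one = [research_question]
    session_one.append(chat(session_one))
    session_one.append(SUMMARY_REQUEST)
    summary = chat(session_one)

    # Step 3: a brand-new session. Only the summary text carries over,
    # never the history of the first conversation.
    session_two = [NEGATIVE_FRAMING + "\n\n" + summary]
    critique = chat(session_two)

    # Step 4 is the human's job: compare the two and decide.
    return summary, critique


def fake_chat(history: List[str]) -> str:
    """Placeholder client (an assumption) so the sketch runs offline."""
    return f"(reply to a session with {len(history)} message(s))"


summary, critique = negative_context_validation(
    fake_chat, "I'm considering a re-platform of my eCommerce site. Is this a good idea?"
)
print(summary)
print(critique)
```

The design choice that matters is that `session_two` starts from an empty history. If the critique runs in the same session as the research, the optimistic context leaks back in.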

3. It’s Okay to Lie to AI Systems

It’s okay to lie to AI systems. They aren’t people. Telling a fresh session that your own business plan belongs to a competitor isn’t really lying; you’re using a tool. Have fun.

When AI Validation IS Okay

Look, I’m not saying AI is useless or that you should never trust it. There are absolutely times when that positive feedback loop is exactly what you need.

Brainstorming and ideation – When you’re trying to generate ideas, you want encouragement. You want something that says “yes, and…” rather than “no, but…” This is where AI shines. Throw wild ideas at it and let it help you explore possibilities without judgment.

Learning and skill-building – If you’re learning to code, learning a new language, or picking up a new skill, positive reinforcement helps. You need that encouragement when you’re struggling through the basics. AI can be a patient teacher that doesn’t make you feel stupid for asking the same question three times.

Personal motivation – Sometimes you just need a pep talk. If you’re using AI to help you stay motivated on a fitness goal or work through a tough day, that validation can be genuinely helpful.

The key is knowing when you need validation versus when you need criticism. If you’re making a major business decision, evaluating a political candidate, or trying to understand a complex issue—that’s when you need to be skeptical. That’s when you need to actively work against the validation bias.

But if you’re brainstorming blog post ideas at 2am? Let the AI tell you they’re all brilliant. That’s fine. Just don’t let it tell you your half-baked political theory is brilliant without questioning it.

Conclusion

Image Credit: Human Element

Here’s what I really want you to understand: AI systems aren’t neutral observers. They’re software programs trained to tell people what they want to hear.


By taking some of the steps this article talks about—negative context validation, maintaining detachment, understanding the core differences between these systems and actual intelligence—you can use that shortcoming of AI to your advantage. You can incentivize it to explore broader options and broader outcomes.

There’s another change I want to see in the world: Stop saying something is true because ChatGPT said so.

There are so many factors that go into whether an AI chatbot will validate or dismiss a fact, and a pretty small one is whether it is true or not. A much bigger one is whether ‘truthiness’ is likely to make you, the user, happy with the conversation. When the stakes are this high – business decisions, financial decisions, creative decisions, and political decisions – using these tools without healthy skepticism risks building a future where validation matters more than truth, and where seeming right matters more than being right. Stay skeptical.